Shaping the Future

Robotics and Artificial Intelligence Advancements at Georgia Tech are Creating Entirely New Paradigms of What Computing Technology Can Do

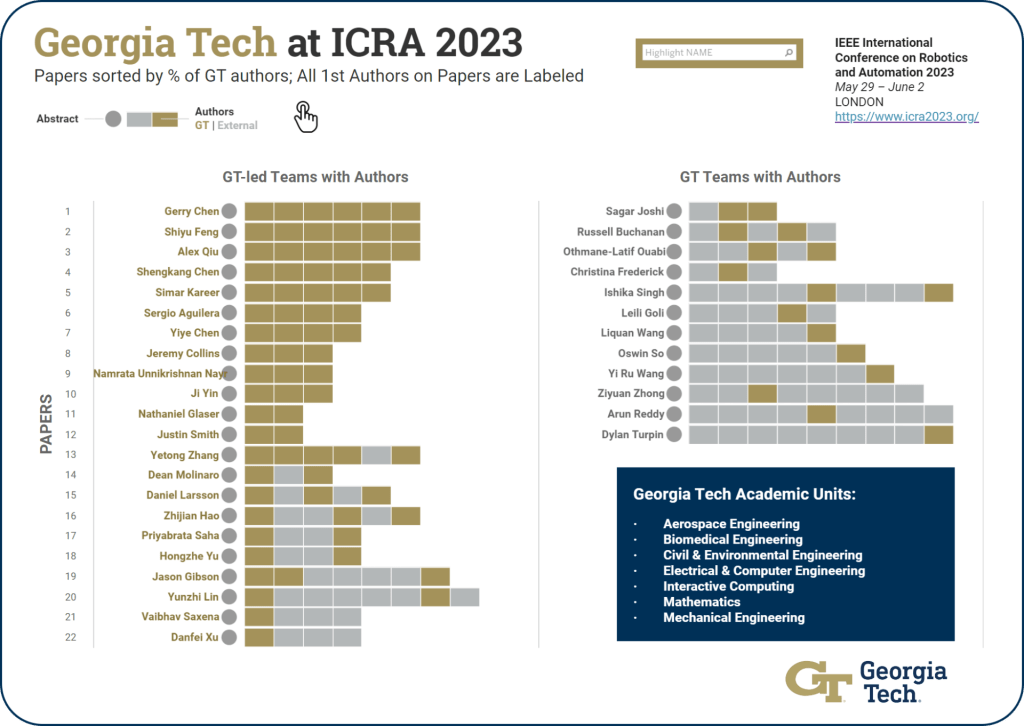

Georgia Tech at ICRA 2023

May 29 – June 2 | London

Pictured above: Georgia Tech ‘robot alum’ Curi turns 10 this year.

Georgia Tech @ ICRA is a joint effort by:

Institute for Robotics and Intelligent Machines • Machine Learning Center • College of Computing

More than 70 researchers from across the College of Engineering and College of Computing are advancing the state of the art in robotics.

Discover the Experts at ICRA

Georgia Tech at ICRA 2023

Georgia Tech is a leading contributor (by number of papers) to ICRA 2023, a research venue focused on robotics and the development of embodied artificial intelligence.

Partner Organizations

Air Force Research Laboratory • Amazon Robotics • Aurora Innovation • Autodesk • California Institute of Technology • Caltech • CCDC US Army Research Laboratory • Clemson • Columbia University • ETH Zurich • Free University of Bozen-Bolzano • Google Brain • Instituto Tecnológico y de Estudios Superiores de Monterrey • Johns Hopkins University • MIT • NASA Jet Propulsion Laboratory • NJIT • NVIDIA • Samsung • Simon Fraser University • Stanford • Toyota Research Institute • UMI 2958 GT-CNRS • Université de Lorraine • University of British Columbia • University of California, Irvine • University of California, San Diego • University of Edinburgh • University of Illinois Urbana-Champaign • University of Maryland • University of Oxford • University of Southern California • University of Toronto • University of Washington • University of Waterloo • USC Viterbi School of Engineering • USNWC PC

FEATURED RESEARCH

Researchers Use Novel Approach to Teach Robot to Navigate Over Obstacles

By Nathan Deen

Quadrupedal robots may be able to step directly over obstacles in their paths thanks to the efforts of a trio of Georgia Tech Ph.D. students.

“The main motivation of the project is getting low-level control over the legs of the robot that also incorporates visual input,” said Yokoyama, a Ph.D. student within the School of Electrical and Computer Engineering. “We envisioned a controller that could be deployed in an indoor setting with a lot of clutter, such as shoes or toys on the ground of a messy home. Whereas blind locomotive controllers tend to be more reactive — if they step on something, they’ll make sure they don’t fall over — we wanted ours to use visual input to avoid stepping on the obstacle altogether.”

(Photo: Kevin Beasley/College of Computing)

RESEARCH TEAM

(pictured at top, from 1st row, left to right):

Ph.D. student in computer vision

Ph.D. student in robotics

Ph.D. student in robotics

Assistant Professor, Interactive Computing

Associate Professor, Interactive Computing

Together We Swarm

Georgia Tech’s Yellow Jackets are tackling robotics research from a holistic perspective to develop safe, responsible AI systems that operate in the physical and virtual worlds. Learn about research areas and teams in the chart. Continue below to meet some of our experts and learn about their latest efforts.

Featured Research

Asst. Professor, Electrical and Computer Engineering

Multi-Robot Systems

Together We Swarm, In Research & Robots

“Microrobots have great potential for healthcare and drug delivery; however, these applications are impeded by the inaccurate control of microrobots, especially in swarms. By collaborating with roboticists, we were able to ‘close the gap’ between single robot design and swarm control. All the different elements were there. We just made the connection.”

Research

Systematically designing local interaction rules to achieve collective behaviors in robot swarms is a challenging endeavor, especially in microrobots, where size restrictions imply severe sensing, communication, and computation limitations. New research demonstrates a systematic approach to controlling the behaviors of microrobots by leveraging the physical interactions in a swarm of 300 3-mm vibration-driven “micro bristle robots” that were designed and fabricated at Georgia Tech. The team’s investigations reveal how physics-driven interaction mechanisms can be exploited to achieve desired behaviors in minimally equipped robot swarms and highlight the specific ways in which hardware and software developments aid in achieving collision-induced aggregations.

Why It Matters

- Microrobots have great potential for healthcare and drug delivery; however, these applications are impeded by the inaccurate control of microrobots, especially in swarms.

- The newly published work is the first demonstration of motility-induced phase separation (MIPS) behaviors on a swarm robotic platform.

- This research promises to help overcome current constraints in the deployment of microrobots, such as limited motility, sensing, communication, and computation.

Home Bots

Giving Robots a Way to Connect by Seeing and Sensing

“Future robots that operate in homes and around people will need to connect via touch with the external world, requiring expensive sensors. Our method dramatically lowers the cost of these touch sensors, making robots more accessible, capable, and safe.”

Ph.D. Student, Mechanical Engineering

Research

Force and torque sensors are commonly used today to give robots a sense of touch. They are often placed at the “wrist” of a robot arm and allow a robot to precisely sense how much force its gripper applies to the world. However, these sensors are expensive, complex, and fragile.

To address these problems, we present Visual Force/Torque Sensing, a method to estimate force and torque without a dedicated sensor. We mount a camera on the robot, focused on the gripper, and train a machine learning algorithm to observe small deflections in the gripper and estimate the forces and torques that caused them. While our method is less accurate than a purpose-built force/torque sensor, it is 100x cheaper and can be used to make touch-sensitive robots that accomplish real-world tasks.
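As a rough illustration of inferring force from an observed deflection, the sketch below fits a straight line from gripper deflection (in pixels) to applied force (in newtons) using ordinary least squares. It is a deliberately simplified stand-in, not the researchers' method: the work described above trains a machine learning model on camera images, whereas this example assumes the deflection has already been reduced to a single number. The function names and all calibration values are invented for illustration.

```python
# Illustrative only: a one-dimensional linear calibration, not the image-based
# model described in the research. All numbers below are made-up sample data.

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def estimate_force(deflection_px, slope, intercept):
    """Predict force (N) from a deflection (px) using the fitted line."""
    return slope * deflection_px + intercept


# Hypothetical calibration pairs: deflection seen by the camera vs. known force.
deflections_px = [0.0, 1.0, 2.0, 3.0, 4.0]
forces_n = [0.0, 0.5, 1.0, 1.5, 2.0]

slope, intercept = fit_line(deflections_px, forces_n)
estimate = estimate_force(2.5, slope, intercept)
print(f"Estimated force: {estimate:.2f} N")
```

A real gripper's deflection is unlikely to be this cleanly linear, which is one reason a learned model on images is the more plausible choice in practice.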

Why It Matters

- Currently, robots are programmed to avoid obstacles, and touching the environment or a human is often viewed as a failure.

- Future robots that operate in homes and around people will need to make contact with the world to manipulate objects and collaborate with humans.

- Currently available touch sensors are expensive and impractical to mount on home robots.

- This work proposes a method to replace these expensive force sensors with a simple camera, allowing robots to be touch-sensitive around humans.

- The new method dramatically lowers the cost of these touch sensors, making robots more accessible, capable, and safe.

- The new method is useful for potential applications in healthcare, such as making a bed and cleaning surfaces.

Ph.D. Student, Interactive Computing

Cross-Applications

From Graffiti to Growing Plants: Art Robot Retooled for Hydroponics

“This project highlights how Georgia Tech’s commitment to interdisciplinary research has led to unexpected applications of seemingly unrelated technologies, and serves as a testament to the value of exploring diverse fields of study and collaboration in order to develop innovative solutions to real-world problems.”

Research

Researchers have applied technology they developed for robotic art research to a hydroponics robot. The team originally developed a cable-based robot designed to spray paint graffiti on large buildings. Because the cable robot technology scales well to large sizes, the same robot became an ideal fit for many agricultural applications.

Through a collaboration with the N.E.W. Center for Agriculture Technology at Georgia Tech, a plant phenotyping robot was built, tested, and deployed in a hydroponic pilot farm on campus. The robot takes around 75 photos of each plant every day as the plant grows, then uses computer vision to construct 3D models of the plants that can be used to non-destructively estimate properties such as crop biomass and photosynthetically active area. This allows for tracking, modeling, and predicting plant growth.

Why It Matters

- Efficiency of resources in agriculture – using less water and fertilizer to produce more food – is becoming increasingly important.

- Advancements in agricultural efficiency are driven by better understanding of plant growth and substrate dynamics. There is a need to understand how plants grow to know how much to feed them.

- This work addresses plant growth model accuracy; accuracy is currently limited by (1) the difficulty in collecting large-scale detailed data and (2) “noisy” data due to genetic and environmental variations.

- Robotics is advanced by combining two existing robot architectures – cable robots and serial manipulators – and using field tests to autonomously image plants at high quality from many angles.

- By leveraging computer vision algorithms, the team can use plant images to generate measurements that are accurate enough for developing more advanced plant growth models.

Agriculture

Applying a Soft Touch to Berry Picking

“Our research in automated harvesting can be applied to soft fruits — such as blackberries, raspberries, loganberries, and grapes — that require intensive labor to harvest manually. Automating the harvesting process will allow for a drastic increase in productivity and decrease in human labor and time spent harvesting.”

B.S. Student, Mechanical Engineering

Research

New research outlines the design, fabrication, and testing of a soft robotic gripper for automated harvesting of blackberries and other fragile fruits. The gripper uses three rubber-based fingers that are uniformly actuated by fishing line, similar to how human tendons function. It also includes a ripeness sensor that uses the reflectance of near-infrared light to determine ripeness level, as well as an endoscopic camera that detects blackberries and provides image feedback for robot arm manipulation. The gripper was used to harvest 139 berries with manual positioning in two separate field tests. The retention force – the amount of force required to detach the berry from the stem – and the average reflectance value were determined for both ripe and unripe blackberries.

The soft robotic gripper was integrated onto a rigid robot arm and successfully harvested fifteen artificial blackberries in a lab setting using visual servoing, a technique that allows the robot arm to iteratively approach the target using image feedback.
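The iterative approach described above can be sketched as a simple proportional control loop: at each step, measure how far the target appears from the image center, then move a fraction of that distance. This is a minimal simulation under stated assumptions, not the team's controller. The function name `servo_to_target`, the gain, the tolerance, and the pixel coordinates are all hypothetical, and the camera is modeled as shifting the target's image position by exactly the commanded motion.

```python
def servo_to_target(target_px, center_px=(320.0, 240.0), gain=0.5,
                    tolerance_px=1.0, max_steps=100):
    """Drive the pixel error between the target and the image center toward zero.

    Returns the number of motion steps taken and the target's final
    position in the image.
    """
    tx, ty = target_px
    cx, cy = center_px
    for step in range(max_steps):
        # Image feedback: where is the target relative to the image center?
        err_x, err_y = tx - cx, ty - cy
        if (err_x ** 2 + err_y ** 2) ** 0.5 <= tolerance_px:
            return step, (tx, ty)
        # Simulated arm motion: moving toward the target shifts its apparent
        # position toward the center by a fraction (gain) of the error.
        tx -= gain * err_x
        ty -= gain * err_y
    return max_steps, (tx, ty)


# Hypothetical starting view: the berry appears 100 px right of and
# 60 px below the image center.
steps, final_px = servo_to_target((420.0, 300.0))
print(f"Stopped after {steps} steps with target at {final_px}")
```

Because each step removes only part of the remaining error, the loop approaches the target gradually rather than in one jump, which is the behavior the term "iteratively" refers to.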

Why It Matters

- In the blackberry industry, about 40-50% of total labor hours are spent maintaining and harvesting the crops; harvesting accounts for about 56% of the total cost of bringing blackberries to market.

- Automating the harvesting process will allow for a drastic increase in productivity and decrease in human labor/time spent harvesting.

- Very few studies have been conducted on automated harvesting for soft fruits and blackberries. This research serves as a proof of concept and a spearhead for further technological development in this area.

- The research can be applied to other soft fruits such as raspberries, loganberries, and grapes.

RESEARCH & ACTIVITIES

Georgia Tech is working with more than 35 organizations on robotics research in ICRA’s main program. Explore the partnerships through the network chart and see the top institutions by number of collaborations.

See you in London!

Project Lead and Web Development: Joshua Preston

Writer: Nathan Deen

Photography and Visual Media: Kevin Beasley, Gerry Chen, Jeremy Collins, Christa Ernst, Joanne Truong

Interactive Data Visualizations and Data Analysis: Joshua Preston