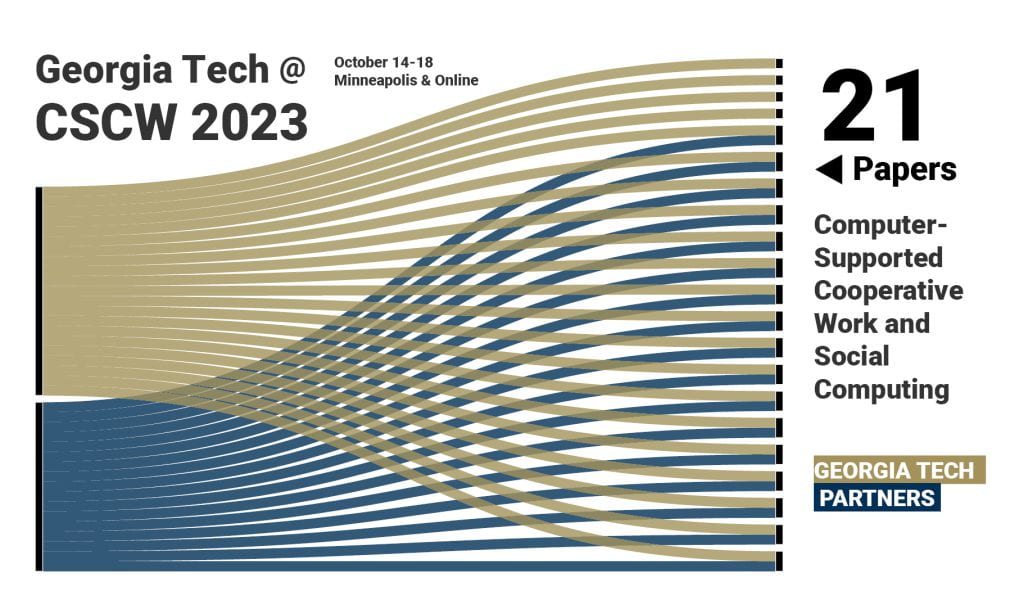

The ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW), Oct. 14 – 18, is a global research event for the fields of computer science and human-computer interaction. CSCW focuses on the study of how people work together and how technology can support and enhance collaborative activities. Georgia Tech is a consistent contributor to the technical program and regularly ranks among the top 10 institutions by number of accepted research papers.

CSCW 2023 welcomes 1200+ authors from 29 countries in the main technical program for demos, papers, and posters.

Georgia Tech is among the top five contributors based on accepted research in the program. The 21 papers coauthored by Georgia Tech experts account for about 8 percent of the papers program. Of those papers, 70 percent have a first author from the institute.

People

Graduate Students

Katrina Alcala • Karthik S Bhat • Aditi Bhatnagar • Anthony D’Achille • Upol Ehsan • Sindhu Kiranmai Ernala • Rachit Gupta • Kaely Hall • Camille Harris • Chae Hyun Kim • Seunghyun Kim • Michael Koohang • Rachel Lowy • Marcus Ma • Niharika Mathur • Beatriz Palacios Abad • Sadie Palmer • Sachin Pendse • Alyssa Sheehan • Bingrui Zong • Tamara Zubatiy

Undergraduate Students

Rachit Gupta • Chae Hyun Kim

Alumni

Azra Ismail • Amber Johnson • Jiachen Li • Dong Whi Yoo

Research Reveals Small Businesses Can Struggle to Leverage Tech Benefiting Workers

By Nathan Deen

A new Georgia Tech study reveals that excluding front-line workers from the design process can increase employee turnover rates, leading to higher costs and reduced efficiency for small businesses implementing new automated technologies.

Alyssa Sheehan has seen firsthand how companies can struggle to leverage new technologies meant to improve systems and benefit workers. The paper “Making Meaning from the Digitalization of Blue-Collar Work,” a best paper at CSCW 2023, explores the impacts.

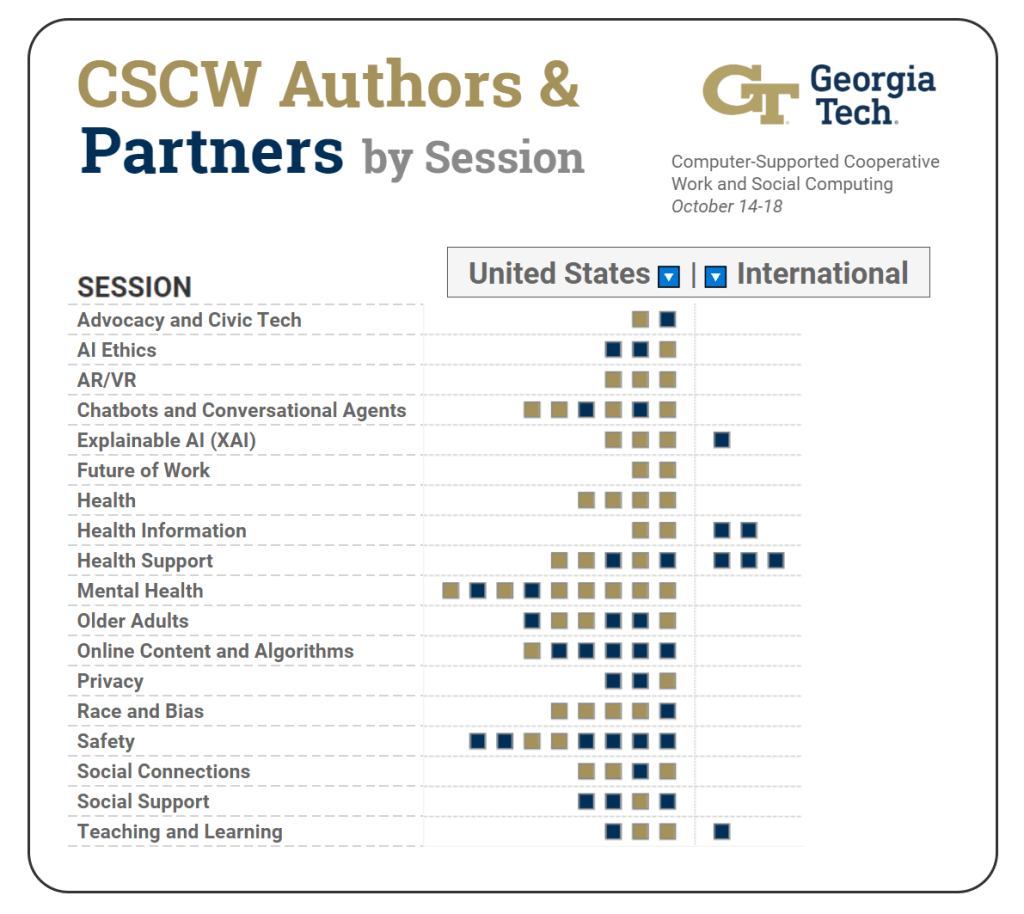

Sessions

Georgia Tech authors appear in almost a third of CSCW 2023 paper sessions. Mental Health is the session with the most GT authors (7), followed by Chatbots and Conversational Agents; Health; and Race and Bias (4 each).

SESSIONS with GT Papers

Advocacy and Civic Tech • AI Ethics • AR/VR • Chatbots and Conversational Agents • Explainable AI (XAI) • Future of Work • Health • Health Information • Health Support • Mental Health • Older Adults • Online Content and Algorithms • Privacy • Race and Bias • Safety • Social Connections • Social Support • Teaching and Learning

Research

Advocacy and Civic Tech

More Than a Property: Place-based Meaning Making and Mobilization on Social Media to Resist Gentrification

Seolha Lee, Christopher Le Dantec

In this study, we examined the successes and challenges in online framing activities specific to place-based activism resisting urban redevelopment and gentrification. We conducted a qualitative analysis of online discourse on Twitter around the redevelopment of an old manufacturing district in Seoul, South Korea, and investigated how the place-based nature of the contested situation complicated the process of public mobilization. We found that the collective meaning-making based on individuals’ existing relationships to a contested place led the citizens who were neither the tenants nor property owners in the area to find the struggle relevant to their lives and to establish their agency in an effort to influence redevelopment policy. We argue that the capacity of online discourse that alters the social meaning of place and its ownership opens up an opportunity for technology to better support grassroots efforts to improve social justice in urban development and policy-making processes. Yet, the disputes we found from the online discussions revealed some limitations of online discourse in place-based activism, which led us to suggest research agendas for the CSCW community to address the limitations.

AI Ethics

Seeing Like a Toolkit: How Toolkits Envision the Work of AI Ethics

Richmond Wong, Michael Madaio, Nick Merrill

Numerous toolkits have been developed to support ethical AI development. However, toolkits, like all tools, encode assumptions in their design about what work should be done and how. In this paper, we conduct a qualitative analysis of 27 AI ethics toolkits to critically examine how the work of ethics is imagined and how it is supported by these toolkits. Specifically, we examine the discourses toolkits rely on when talking about ethical issues, who they imagine should do the work of ethics, and how they envision the work practices involved in addressing ethics. Among the toolkits, we identify a mismatch between the imagined work of ethics and the support the toolkits provide for doing that work. In particular, we identify a lack of guidance around how to navigate labor, organizational, and institutional power dynamics as they relate to performing ethical work. We use these omissions to chart future work for researchers and designers of AI ethics toolkits.

AR/VR

The Stage and the Theatre: AltspaceVR and its Relationship to Discord

Katrina Alcala, Anthony D’Achille, Amy Bruckman

Immersive virtual reality (VR) has seen growth in usage over the last few years and that growth is expected to accelerate. Correspondingly, many VR-based online communities have begun to emerge, and several social VR applications such as AltspaceVR have gained significant popularity. However, virtual reality can be isolating. Users can meet and connect with people in VR, but inevitably, when a user removes their headset, their friends are no longer there. In this paper, we look at how communities in AltspaceVR, a popular social VR application, handle this challenge. We conduct fourteen interviews and over 70 hours of participant observation and find that AltspaceVR users and communities have turned to Discord to solve many of their needs, such as facilitating more ubiquitous communication, planning community activities and AltspaceVR Events, and hosting casual social discussion. By using the communicative ecology model for our analysis, we find that AltspaceVR, Discord, and the communities that intersect the two have formed a tightly-coupled communicative ecology, which we call the “stage” and “theater”. Discord acts as the “theater,” where actors and crew collaborate and communicate to prepare for the main event, all the while building important social bonds. AltspaceVR acts as the “stage,” where those efforts manifest in ephemeral but high-value experiences that bring the community together. Finally, we compare the communities we studied with those found in massively-multiplayer online games (MMOGs) and provide insights regarding the design of social VR applications and online communities.

Chatbots and Conversational Agents

“I don’t know how to help with that” – Learning from Limitations of Modern Conversational Agent Systems in Caregiving Networks

Tamara Zubatiy, Niharika Mathur, Larry Heck, Kayci Vickers, Agata Rozga, Elizabeth Mynatt

While commercial conversational agents (CA) (e.g., Google Assistant, Siri, Alexa) are widely used, these systems have limitations in error-handling, flexibility, personalization and overall dialogue management that are amplified in care coordination settings. In this paper, we synthesize and articulate these limitations through quantitative and qualitative analysis of 56 older adults interacting with a commercial CA deployed in their home for a 10-week period. We look at the CA as a compensatory technology in an older adult’s care network. We argue that the CA limitations are rooted in the rigid cue-and-response style of task-oriented interactions common in CAs. We then propose a redesign for CA conversation flow to favor flexibility and personalization that is nonetheless viable within the limitations of current AI and machine learning technologies. We explore design tradeoffs to better support the usability needs of older adults compared to current design optimizations driven by efficiency and privacy goals.

Explainable AI (XAI)

Charting the Sociotechnical Gap in Explainable AI: A Framework to Address the Gap in XAI

Upol Ehsan, Koustuv Saha, Munmun De Choudhury, Mark Riedl

Explainable AI (XAI) systems are sociotechnical in nature; thus, they are subject to the sociotechnical gap, the divide between the technical affordances and the social needs. However, charting this gap is challenging. In the context of XAI, we argue that charting the gap improves our problem understanding, which can reflexively provide actionable insights to improve explainability. Utilizing two case studies in distinct domains, we empirically derive a framework that facilitates systematic charting of the sociotechnical gap by connecting AI guidelines in the context of XAI and elucidating how to use them to address the gap. We apply the framework to a third case in a new domain, showcasing its affordances. Finally, we discuss conceptual implications of the framework, share practical considerations in its operationalization, and offer guidance on transferring it to new contexts. By making conceptual and practical contributions to understanding the sociotechnical gap in XAI, the framework expands the XAI design space.

Future of Work

Making Meaning from the Digitalization of Blue-Collar Work

Alyssa Sheehan, Christopher Le Dantec

With rapid advances in computing, we are beginning to see the expansion of technology into domains far afield from traditional office settings historically at the center of CSCW research. Manufacturing is one industry undergoing a new phase of digital transformation. Shop-floor workers are being equipped with tools to deliver efficiency and support data-driven decision making. To understand how these kinds of technologies are affecting the nature of work, we conducted a 15-month qualitative study of the digitalization of the shipping and receiving department at a small manufacturer located in the Southeastern United States. Our findings provide an in-depth understanding of how the norms and values of factory floor workers shape their perception and adoption of computing services designed to augment their work. We highlight how emerging technologies are creating a new class of hybrid workers and point to the social and human elements that need to be considered to preserve meaningful work for blue-collar professionals.

Health

It Takes Two to Avoid Pregnancy: Addressing Conflicting Perceptions of Birth Control Pill Responsibility in Romantic Relationships

Marcus Ma, Chae Hyun Kim, Kaely Hall, Jennifer Kim

While birth control pills are one of the most common forms of contraception, their usage has several emotional and physical costs, such as taking the pill daily and experiencing hormonal side effects. The burden of these tasks in relationships generally falls on the pill user with minimal involvement from their partner. In this study, we conducted semi-structured interviews with pill users and their partners to investigate the differences between their perceived current and ideal divisions of birth control responsibility. During the interview, we presented a collaborative birth control tracking app prototype to examine how such technology can overcome these discrepancies. We found that pill users were unsatisfied with their partners’ engagement in contraceptive tasks but did not communicate this well. Meanwhile, partners wanted to contribute more to pregnancy prevention but did not know how. When presented with our app prototype, users and partners stated that our design could address these issues by improving communication between users and partners. In particular, users appreciated how the app would increase engagement and support from their partner, and partners liked that the app presented several concrete ways to become more involved and show emotional support. However, privacy issues exist given the sensitive nature of contraception. We discuss design considerations for protecting privacy while recognizing pill users’ efforts and promoting partners’ involvement.

Health Information

Public Health Calls for/with AI: An Ethnographic Perspective

Azra Ismail, Divy Thakkar, Neha Madhiwalla, Neha Kumar

Artificial Intelligence (AI) based technologies are increasingly being integrated into public sector programs for decision-support and effective distribution of constrained resources. The field of Computer Supported Cooperative Work (CSCW) research has begun to examine how the resultant sociotechnical systems may be designed appropriately when targeting underserved populations. We present an ethnographic study of a large-scale real-world integration of an AI system for resource allocation in a call-based maternal and child health program in India. Our findings uncover complexities around determining who benefits from the intervention, how the human-AI collaboration is managed, when intervention must take place in alignment with various priorities, and why the AI is sought, for what purpose. Our paper offers takeaways for human-centered AI integration in public health, drawing attention to the work done by the AI as actor, the work of configuring the human-AI partnership with multiple diverse stakeholders, and the work of aligning program goals for design and implementation through continual dialogue across stakeholders.

Health Support

Supporters First: Understanding Online Social Support on Mental Health from a Supporter Perspective

Meeyun Kim, Koustuv Saha, Munmun De Choudhury, Daejin Choi

Social support, or peer support, in mental health has become well established in online spaces by reducing the potential risk of critical mental illness (e.g., suicidal thoughts) among support-seekers. While prior work has mostly focused on support-seekers, particularly their behavioral characteristics and the effects of online social support on them, this paper seeks to understand online social support from the perspective of supporters, who have informational or emotional resources that may affect support-seekers either positively or negatively. To this end, we collect and analyze a large-scale dataset consisting of supporting comments and their target posts from 55 mental health communities on Reddit. We also develop a deep-learning-based model that assigns informational and emotional support scores to the supporting comments. Based on the collected and scored dataset, we measure the characteristics of the supporters from behavioral and content perspectives, which reveals that supporters tend to give more emotional support than informational support and that the atmosphere of social support communities also tends to be emotional. We also examine the relations between supporters and support-seekers by introducing the notion of a “social supporting network”, whose nodes and edges are the users and the supporting comments, respectively. Our analysis of the top users by out-degree and in-degree in the social supporting network demonstrates that heavily supportive users are more likely to give informational support with diverse content, while users who attract much support exhibit continuous support-seeking behaviors by uploading multiple posts with similar content. Lastly, we identify structural communities in the social supporting network to explore whether and how supporters and support-seeking users are grouped. By conducting topic analysis on both the support-seeking posts and the supporting comments of individual communities, we reveal that small communities deal with a specific topic such as hair-pulling disorder. We believe that the methodologies, dataset, and findings can not only expose more research questions on online social support in mental health, but also provide insight on improving social support in online platforms.
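To make the idea of a supporting network concrete, here is a minimal sketch of counting out-degree and in-degree from comment records. It is an illustration only, not the authors' pipeline: the user names, the `comments` list, and the `degree_counts` helper are hypothetical, and it assumes each edge points from the commenter to the author of the post being supported.

```python
from collections import Counter

# Hypothetical records: one (commenter, post_author) pair per supporting comment.
# Assumption: an edge points from the supporter to the support-seeker.
comments = [
    ("ana", "ben"),
    ("ana", "cy"),
    ("ana", "ben"),
    ("dee", "ben"),
    ("cy", "ben"),
]

def degree_counts(edges):
    """Return (out_degree, in_degree) Counters for a directed edge list."""
    out_degree = Counter(src for src, _ in edges)  # support given
    in_degree = Counter(dst for _, dst in edges)   # support received
    return out_degree, in_degree

out_degree, in_degree = degree_counts(comments)
top_supporter = out_degree.most_common(1)[0]  # user who gave the most support
top_seeker = in_degree.most_common(1)[0]      # user who received the most support
print(top_supporter, top_seeker)
```

Under these assumptions, a high out-degree marks a heavily supportive user and a high in-degree marks a user who attracts much support.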

We are half-doctors: Family Caregivers as Boundary Actors in Chronic Disease Management

Karthik S Bhat, Amanda Hall, Tiffany Kuo, Neha Kumar

Computer-Supported Cooperative Work (CSCW) and Human–Computer Interaction (HCI) research is increasingly investigating the roles of caregivers as ancillary stakeholders in patient-centered care. Our research extends this body of work to identify caregivers as key decision-makers and boundary actors in mobilizing and managing care. We draw on qualitative data collected via 20 semi-structured interviews to examine caregiving responsibilities in physical and remote care interactions within households in urban India. Our findings demonstrate the crucial intermediating roles family caregivers take on while situated along the boundaries separating healthcare professionals, patients and other household members, and online/offline communities. We propose design recommendations for supporting caregivers in intermediating patient-centered care, such as through training content and expert feedback mechanisms for remote care, collaborative tracking mechanisms integrating patient- and caregiver-generated health data, and caregiving-centered online health communities. We conclude by arguing for recognizing caregivers as critical stakeholders in patient-centered care who might constitute technologically assisted pathways to care.

Mental Health

Discussing Social Media During Psychotherapy Consultations: Patient Narratives and Privacy Implications

Dong Whi Yoo, Aditi Bhatnagar, Sindhu Kiranmai Ernala, Asra Ali, Michael L. Birnbaum, Gregory Abowd, Munmun De Choudhury

Social media platforms are being utilized by individuals with mental illness for engaging in self-disclosure, finding support, or navigating treatment journeys. Individuals also increasingly bring their social media data to psychotherapy consultations. This emerging practice during psychotherapy can help us to better understand how patients appropriate social media technologies to develop and iterate patient narratives – the stories of patients’ own experiences that are vital in mental health treatment. In this paper, we seek to understand patients’ perspectives regarding why and how they bring up their social media activities during psychotherapy consultations as well as related concerns. Through interviews with 18 mood disorder patients, we found that social media helps augment narratives around interpersonal conflicts, digital detox, and self-expression. We also found that discussion of social media activities shines a light on the power imbalance and privacy concerns regarding use of patient-generated health information. Based on the findings, we discuss that social media data are different from other types of patient-generated health data in terms of supporting patient narratives because of the social interactions and curation social media inherently engenders. We also discuss privacy concerns and trust between a patient and a therapist when patient narratives are supported by patients’ social media data. Finally, we suggest design implications for social computing technologies that can foster patient narratives rooted in social media activities.

Marginalization and the Construction of Mental Illness Narratives Online: Foregrounding Institutions in Technology-Mediated Care

Sachin Pendse, Neha Kumar, Munmun De Choudhury

People experiencing mental illness are often forced into a system in which their chances of finding relief are largely determined by institutions that evaluate whether their distress deserves treatment. These governing institutions can be offline, such as the American healthcare system, and can also be online, such as online social platforms. As work in HCI and CSCW frames technology-mediated support as one method to fill structural gaps in care, in this study, we ask the question: how do online and offline institutions influence how people in resource-scarce areas come to understand and express their distress online? We situate our work in U.S. Mental Health Professional Shortage Areas (MHPSAs), or areas in which there are too few mental health professionals to meet expected need. We use an analysis of illness narratives to answer this question, conducting a large-scale linguistic analysis of social media posts to understand broader trends in expressions of distress online. We then build on these analyses via in-depth interviews with 18 participants with lived experience of mental illness, and analyze the role of online and offline institutions in how participants express distress online. Through our findings, we argue that a consideration of institutions is crucial in designing effective technology-mediated support, and discuss the implications of considering institutions in mental health support for platform designers.

Older Adults

Privacy vs. Awareness: Relieving the Tension between Older Adults and Adult Children When Sharing In-home Activity Data

Jiachen Li, Bingrui Zong, Tingyu Cheng, Yunzhi Li, Elizabeth Mynatt, Ashutosh Dhekne

While aging adults frequently prefer to “age in place”, their children can worry about their well-being, especially when they live at a distance. Many in-home systems are designed to monitor the real-time status of seniors at home and provide information to their adult children. However, we observed that the needs and concerns of both sides in the information sharing process are often not aligned. In this research, we examined the design of a system that mitigates the privacy needs of aging adults in light of the information desires of adult children. We apply an iterative process to design and evaluate a visualization of indoor location data and compare its benefits to displaying raw video from cameras. We elaborate on the tradeoffs surrounding privacy and awareness made by older adults and their children, and synthesize design criteria for designing a visualization system to manage these tensions and tradeoffs.

Online Content and Algorithms

Sociotechnical Audits: Broadening the Algorithm Auditing Lens to Investigate Targeted Advertising

Michelle Lam, Ayush Pandit, Colin Kalicki, Rachit Gupta, Poonam Sahoo, Danaë Metaxa

Algorithm audits are powerful tools for studying black-box systems without direct knowledge of their inner workings. While very effective in examining technical components, the method stops short of a sociotechnical frame, which would also consider users themselves as an integral and dynamic part of the system. Addressing this limitation, we propose the concept of sociotechnical auditing: auditing methods that evaluate algorithmic systems at the sociotechnical level, focusing on the interplay between algorithms and users as each impacts the other. Just as algorithm audits probe an algorithm with varied inputs and observe outputs, a sociotechnical audit (STA) additionally probes users, exposing them to different algorithmic behavior and measuring their resulting attitudes and behaviors. As an example of this method, we develop Intervenr, a platform for conducting browser-based, longitudinal sociotechnical audits with consenting, compensated participants. Intervenr investigates the algorithmic content users encounter online, and also coordinates systematic client-side interventions to understand how users change in response. As a case study, we deploy Intervenr in a two-week sociotechnical audit of online advertising (N=244) to investigate the central premise that personalized ad targeting is more effective on users. In the first week, we observe and collect all browser ads delivered to users, and in the second, we deploy an ablation-style intervention that disrupts normal targeting by randomly pairing participants and swapping all their ads. We collect user-oriented metrics (self-reported ad interest and feeling of representation) and advertiser-oriented metrics (ad views, clicks, and recognition) throughout, along with a total of over 500,000 ads. Our STA finds that targeted ads indeed perform better with users, but also that users begin to acclimate to different ads in only a week, casting doubt on the primacy of personalized ad targeting given the impact of repeated exposures. In comparison with other evaluation methods that only study technical components, or only experiment on users, sociotechnical audits evaluate sociotechnical systems through the interplay of their technical and human components.
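The pair-and-swap intervention described in the abstract can be sketched in a few lines. This is a hedged illustration and not Intervenr's actual code: the `pair_and_swap` function, the `ads_by_user` mapping, and the rule that an unpaired participant keeps their own ads are all assumptions made for the example.

```python
import random

def pair_and_swap(ads_by_user, seed=0):
    """Randomly pair users and give each the ads collected for their partner.

    Hypothetical helper: `ads_by_user` maps a user id to that user's ads.
    """
    users = sorted(ads_by_user)   # fixed starting order for reproducibility
    rng = random.Random(seed)
    rng.shuffle(users)
    swapped = {}
    # Walk the shuffled list two at a time and exchange the pair's ads.
    for i in range(0, len(users) - 1, 2):
        a, b = users[i], users[i + 1]
        swapped[a] = ads_by_user[b]
        swapped[b] = ads_by_user[a]
    # Assumption: with an odd number of users, the last one keeps their own ads.
    if len(users) % 2 == 1:
        swapped[users[-1]] = ads_by_user[users[-1]]
    return swapped

ads = {"u1": ["a1"], "u2": ["a2"], "u3": ["a3"], "u4": ["a4"]}
swapped = pair_and_swap(ads)
```

With an even number of participants, every user ends up seeing ads that were targeted at someone else, which is what lets the comparison between weeks isolate the effect of targeting.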

Privacy

Privacy Legislation as Business Risks: How GDPR and CCPA are Represented in Technology Companies’ Investment Risk Disclosures

Richmond Wong, Andrew Chong, R. Cooper Aspegren

Power exercised by large technology companies has led to concerns over privacy and data protection, evidenced by the passage of legislation including the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). While much privacy research has focused on how users perceive privacy and interact with companies, we focus on how privacy legislation is discussed among a different set of relationships—those between companies and investors. This paper investigates how companies translate the GDPR and CCPA into business risks in documents created for investors. We conduct a qualitative document analysis of annual regulatory filings (Form 10-K) from nine major technology companies. We outline five ways that technology companies consider GDPR and CCPA as business risks, describing both direct and indirect ways that the legislation may affect their businesses. We highlight how these findings are relevant for the broader CSCW and privacy research communities in research, design, and practice. Creating meaningful privacy changes within existing institutional structures requires some understanding of the dynamics of these companies’ decision-making processes and the role of capital.

Race and Bias

Honestly, I think TikTok has a Vendetta Against Black Creators: Understanding Black Content Creator Experiences on TikTok

Camille Harris, Amber Johnson, Sadie Palmer, Diyi Yang, Amy Bruckman

As video-sharing social-media platforms have increased in popularity, a ‘creator economy’ has emerged in which platform users make online content to share with wide audiences, often for profit. As the creator economy has risen in popularity, so have concerns of racism and discrimination on social media. Black content creators across multiple platforms have identified challenges with racism and discrimination, perpetuated by platform users, companies that collaborate with creators for sponsored content, and the algorithms governing these platforms. In this work, we provide a qualitative study of the experiences of Black content creators on one video-sharing platform, TikTok. We conduct 12 semi-structured interviews with Black TikTok content creators to understand their experiences, identify the challenges they face, and understand their perceptions of the platform. We find that some common challenges include: content moderation, monetization, harassment and bullying from viewers, lack of transparency of recommendation and filtering algorithms, and the perception that content from Black creators is treated unfairly by those algorithms. We then suggest design interventions to mitigate the challenges, bolster positive aspects, and overall cultivate an inclusive algorithmic experience for Black creators on TikTok.

Safety

Getting Meta: A Multimodal Approach for Detecting Unsafe Conversations within Instagram Direct Messages of Youth

Shiza Ali, Afsaneh Razi, Ashwaq Alsoubai, Seunghyun Kim, Chen Ling, Munmun De Choudhury, Pamela Wisniewski, Gianluca Stringhini

Instagram, one of the most popular social media platforms among youth, has recently come under scrutiny for potentially being harmful to the safety and well-being of our younger generations. Automated approaches for risk detection may be one way to help mitigate some of these risks if such algorithms are both accurate and contextual to the types of online harms youth face on social media platforms. However, the imminent switch by Instagram to end-to-end encryption will limit the type of data that will be available to the platform to detect and mitigate such risks. In this paper, we investigate which indicators are most helpful in automatically detecting risk in Instagram private conversations, with an eye on high-level metadata, which will still be available in the scenario of end-to-end encryption. Toward this end, we collected Instagram data from 172 youth (ages 13-21) and asked them to identify private message conversations that made them feel uncomfortable or unsafe. Our participants risk-flagged 28,725 conversations that contained 4,181,970 direct messages, including textual posts and images. Based on this rich and multimodal dataset, we tested multiple feature sets (metadata, linguistic cues, and image features) and trained classifiers to detect risky conversations. Overall, we found that the metadata features (e.g., conversation length, a proxy for participant engagement) were the best predictors of risky conversations. However, for distinguishing between risk types, the different linguistic and media cues were the best predictors. Based on our findings, we provide design implications for AI risk detection systems in the presence of end-to-end encryption. More broadly, our work contributes to the literature on adolescent online safety by moving toward more robust solutions for risk detection that directly take into account the lived risk experiences of youth.

Sliding into My DMs: Detecting Uncomfortable or Unsafe Sexual Risk Experiences within Instagram Direct Messages Grounded in the Perspective of Youth

Afsaneh Razi, Seunghyun Kim, Ashwaq Alsoubai, Shiza Ali, Gianluca Stringhini, Munmun De Choudhury, Pamela Wisniewski

We collected Instagram data from 150 adolescents (ages 13-21) that included 15,547 private message conversations of which 326 conversations were flagged as sexually risky by participants. Based on this data, we leveraged a human-centered machine learning approach to create sexual risk detection classifiers for youth social media conversations. Our Convolutional Neural Network (CNN) and Random Forest models outperformed in identifying sexual risks at the conversation-level (AUC=0.88), and CNN outperformed at the message-level (AUC=0.85). We also trained classifiers to detect the severity risk level (i.e., safe, low, medium-high) of a given message with CNN outperforming other models (AUC=0.88). A feature analysis yielded deeper insights into patterns found within sexually safe versus unsafe conversations. We found that contextual features (e.g., age, gender, and relationship type) and Linguistic Inquiry and Word Count (LIWC) contributed the most for accurately detecting sexual conversations that made youth feel uncomfortable or unsafe. Our analysis provides insights into the important factors and contextual features that enhance automated detection of sexual risks within youths’ private conversations. As such, we make valuable contributions to the computational risk detection and adolescent online safety literature through our human-centered approach of collecting and ground truth coding private social media conversations of youth for the purpose of risk classification.

Social Connections

Alone and Together: Resilience in a Fluid Socio-Technical-Natural System

Beatriz Palacios Abad, Michael Koohang, Morgan Vigil-Hayes, Ellen Zegura

Disruption to routines is an increasingly common part of everyday life. With the roots of some disruptions in the interconnectedness of the world and environmental and socio-political instability, there is good reason to believe that conditions that cause widespread disruption will persist. Individuals, communities, and systems are thus challenged to engage in resilience practices to deal with both acute and chronic disruption. Our interest is in chronic, everyday resilience, and the role of both technology and non-technical adaptation practices engaged by individuals and communities, with a specific focus on practices centered in nature. Foregrounding nature’s role allows close examination of environmental adversity and nature as part of adaptivity. We add to the CSCW and HCI literature on resilience by examining long-distance hikers, for whom both the sources of adversity and the mitigating resilience processes cut across the social, the technical, and the environmental. In interviews with 12 long-distance hikers we find resilience practices that draw upon technology, writ large, and nature in novel assemblages, and leverage fluid configurations of the individual and the community. We place our findings in the context of a definition for resilience that emphasizes a systems view at multiple scales of social organization. We make three primary contributions: (1) we contribute an empirical account of resilience in a contextual setting that complements prior CSCW resilience studies, (2) we add nuance to existing models for resilience to reflect the role of technology as both a resilience tool and a source of adversity, and (3) we identify the need for new designs that integrate nature into systems as a way to foster collaborative resilience. This nuanced understanding of the role of technology in individual and community resilience in and with nature provides direction for technology design that may be useful for everyday disrupted life.

Social Support

Evaluating Similarity Variables for Peer Matching in Digital Health Storytelling

Herman Saksono, Vivien Morris, Andrea Parker, Krzysztof Gajos

Peer matching can enhance the impact of social health technologies. By matching similar peers, online health communities can optimally facilitate social modeling that supports positive health attitudes and moods. However, little work has examined how to operationalize similarities in digital health tools, thus limiting our ability to perform optimal peer matching. To address this gap, we conducted a factorial experiment to examine how three categories of similarity variables (i.e., Demographic, Ability, Experiential) can be used to perform peer matching that supports the social modeling of physical activity. We focus this study on physical activity because it is a health behavior that reduces the risk of chronic diseases. We also prioritized this study for single-caregiver mothers who often face substantial barriers to being active because of immense employment and household responsibilities, especially Black single-caregiver mothers. We recruited 309 single-caregiver mothers (49% Black, 51% white), then we asked them to listen to peer audio storytelling about family physical activity. We randomly matched/mismatched the storyteller’s profile using the three categories of similarity variables. Our analyses demonstrated that matching by Demographic variables led to a significantly higher Physical Activity Intention. Furthermore, our subgroup analyses indicated that Black single-caregiver mothers experienced a significant and immediate effect of peer matching in Physical Activity Intention, Self-efficacy, and mood. In contrast, white single-caregiver mothers did not report any significant immediate effect. Collectively, our data suggest that peer matching in health storytelling is potentially beneficial for racially minoritized groups, and that having diverse representations in health technology is required for promoting health equity.

Teaching and Learning

Building Causal Agency in Autistic Students through Iterative Reflection in Collaborative Transition Planning

Rachel Lowy, Chung Eun Lee, Gregory Abowd, Jennifer Kim

Transition planning is a collaborative process to promote agency in students with disabilities by encouraging them to participate in setting their own goals with team members and learn ways to assess their progress towards the goals. For autistic young adults who experience a lower employment rate, less stability in employment, and lower community connections than those with other disabilities, successful transition planning is an important opportunity to develop agency towards preparing and attaining success in employment and other areas meaningful to them. However, a failure of consistent information sharing among team members and opportunities for agency in students has prevented successful transition planning for autistic students. Therefore, this work brings causal agency theory and the collaborative reflection framework together to uncover ways transition teams can develop students’ agency by collaboratively reflecting on students’ inputs related to transition goals and progress. By interviewing autistic students, parents of autistic students, and professionals who were involved in transition planning, we uncovered that teams can better support student agency by accommodating their needs and encouraging their input in annual meetings, building relationships through transparent and frequent communication about day-to-day activities, centering goals on students’ interests, and supporting students’ skill-building in areas related to their transition goals. However, we found that many teams were not enacting these practices, leading to frustration and negative outcomes for young adults. Based on our findings, we propose a role for autistic students in the collaborative reflection framework that encourages participation and builds causal agency. We also make design recommendations to encourage autistic students’ participation in collaborative reflection around long-term and short-term needs in ways that promote their causal agency.

Big Picture

Explore an international view of the CSCW 2023 paper authors by session. Pick a country ⚫ to see its authors by session and/or select an organization to see its authors. Note: Organizations are listed as they are in the program, so duplicates may occur.

Development: College of Computing

Project and Web Lead: Josh Preston

Data Source: CSCW

Additional Data Collection: Joni Isbell, Nadia Muhammad

Data Graphics: Josh Preston