ICRA 2024

IEEE International Conference on Robotics and Automation | May 13 – 17

Robotics research is part of the next wave of computing innovation and is key to shaping how artificial intelligence will become part of our lives. Meet the Georgia Tech experts who are charting a path forward. #ICRA2024

The IEEE International Conference on Robotics and Automation is a premier global venue for robotics research. Georgia Tech is a top contributor to the technical program. Discover the people who are leading robotics research in the era of artificial intelligence.

Georgia Tech at ICRA 2024

Explore Georgia Tech’s experts and the organizations they are working with at ICRA.

By the Numbers

Partner Organizations

Boston Dynamics AI Institute • Bowery Farming • California Institute of Technology • Carnegie Mellon University • Concordia University • Emory University • ETH Zurich • Georgia Tech • Google • Hello Robot • Honda Research Institute, USA • Intel • Korea Advanced Institute of Science and Technology • Long School of Medicine • Meta • Massachusetts Institute of Technology • Morehouse College • Motional • Parkinson’s Foundation • Politecnico di Milano • Sandia National Laboratories • SkyMul • Southern University of Science and Technology • Stanford University • Toyota Research Institute • UC Berkeley • UC San Diego • University of Copenhagen • University of Maryland, College Park • University of Michigan • University of Modena and Reggio Emilia • University of North Carolina, Charlotte • University of Toronto • University of Washington • ZOOX

Sessions with Georgia Tech

- Aerial Systems: Perception and Autonomy III

- AI-Enabled Robotics and Learning

- AI-Enabled Robotics II

- Award Session, CC-301 | Cognitive Robotics

- Bioinspired Robot Abilities

- Collision Avoidance II

- Continual Learning

- Deep Learning I

- Distributed Robot Systems

- Intelligent Transportation Systems IV

- Legged Robots and Learning II

- Legged Robots I

- Legged Robots IV

- Localization VI

- Localization VII

- Machine Learning for Robot Control II

- Manipulation Planning

- Motion and Path Planning II

- Multi-Modal Perception for HRI I

- Object Detection III

- Optimization and Optimal Control I

- Optimization and Optimal Control II

- Optimization and Optimal Control III

- Path Planning for Multiple Mobile Robots or Agents I

- Performance Evaluation and Benchmarking

- Planning under Uncertainty I

- Planning under Uncertainty II

- Rehabilitation Robotics

- Representation Learning II

- Semantic Scene Understanding I

- Soft Robot Applications II

- Surgical Robotics II

- Swarm Robotics

- Visual Learning II

Faculty

Best Paper Finalist

The Big Picture

Georgia Tech’s 38 papers in the technical program include one best paper award finalist, from the School of Interactive Computing, with faculty contributions led by the College of Computing. Among the institute’s colleges, half of the faculty come from computing (12), with the other half from engineering and the sciences. The School of Interactive Computing has the most faculty experts, with 10.

Search for people and organizations in the chart below. The first column shows Georgia Tech-led teams. Each row is an entire team, with the label showing the first author’s name. Explore more now.

Explore Research

Georgia Tech faculty and students are participating across the ICRA technical program. Explore their latest robotics work and results during the week starting May 12. Total contributions to the papers program include 38 papers, with one best paper award finalist.

Best Paper Finalist

Winner will be announced at ICRA in Yokohama

Cognitive Robotics Session

Vision-Language Frontier Maps for Zero-Shot Semantic Navigation

Naoki Yokoyama, Sehoon Ha, Dhruv Batra, Jiuguang Wang, Bernadette Bucher

Understanding how humans leverage semantic knowledge to navigate unfamiliar environments and decide where to explore next is pivotal for developing robots capable of human-like search behaviors. We introduce a zero-shot navigation approach, Vision-Language Frontier Maps (VLFM), which is inspired by human reasoning and designed to navigate towards unseen semantic objects in novel environments. VLFM builds occupancy maps from depth observations to identify frontiers, and leverages RGB observations and a pre-trained vision-language model to generate a language-grounded value map. VLFM then uses this map to identify the most promising frontier to explore for finding an instance of a given target object category. We evaluate VLFM in photo-realistic environments from the Gibson, Habitat-Matterport 3D (HM3D), and Matterport 3D (MP3D) datasets within the Habitat simulator. Remarkably, VLFM achieves state-of-the-art results on all three datasets as measured by success weighted by path length (SPL) for the Object Goal Navigation task. Furthermore, we show that VLFM’s zero-shot nature enables it to be readily deployed on real-world robots such as the Boston Dynamics Spot mobile manipulation platform. We deploy VLFM on Spot and demonstrate its capability to efficiently navigate to target objects within an office building in the real world, without any prior knowledge of the environment. The accomplishments of VLFM underscore the promising potential of vision-language models in advancing the field of semantic navigation. Videos of real world deployment can be viewed at naoki.io/vlfm.
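The frontier-selection step and the SPL metric described in the abstract can be sketched as follows. This is a minimal illustration, not the authors' implementation: the grid encoding (`FREE`, `OCCUPIED`, `UNKNOWN`), the function names, and the toy value map are all assumptions made for the example. In VLFM the value map comes from a pre-trained vision-language model; here it is hard-coded.

```python
from typing import List, Optional, Tuple

# Hypothetical cell labels for a toy occupancy grid (not from the paper).
FREE, OCCUPIED, UNKNOWN = 0, 1, -1
Cell = Tuple[int, int]


def find_frontiers(grid: List[List[int]]) -> List[Cell]:
    """Return free cells that border at least one unknown cell (4-neighborhood)."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def best_frontier(grid: List[List[int]], value_map: List[List[float]]) -> Optional[Cell]:
    """Pick the frontier cell with the highest value-map score, or None if none exist."""
    frontiers = find_frontiers(grid)
    if not frontiers:
        return None
    return max(frontiers, key=lambda rc: value_map[rc[0]][rc[1]])


def spl(episodes: List[Tuple[bool, float, float]]) -> float:
    """Success weighted by path length.

    Each episode is (success, shortest_path_length, actual_path_length).
    Assumes shortest_path_length > 0 for every episode.
    """
    if not episodes:
        return 0.0
    total = 0.0
    for success, shortest, taken in episodes:
        if success:
            total += shortest / max(taken, shortest)
    return total / len(episodes)


# Toy 3x3 map: the right-hand column is partly unexplored.
grid = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
# Stand-in for language-grounded scores; higher means more promising.
value_map = [
    [0.1, 0.3, 0.0],
    [0.2, 0.0, 0.0],
    [0.1, 0.4, 0.8],
]
target = best_frontier(grid, value_map)
score = spl([(True, 5.0, 10.0), (True, 4.0, 4.0), (False, 3.0, 7.0)])
```

In this toy map the two frontier cells are `(0, 1)` and `(2, 2)`, and the second is selected because its value is higher. A failed episode contributes zero to SPL, and a successful one contributes the ratio of the shortest path to the path actually taken.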

Featured Research

DeepSee — Visualization System for Analyzing Oceanographic Data

By Nathan Deen


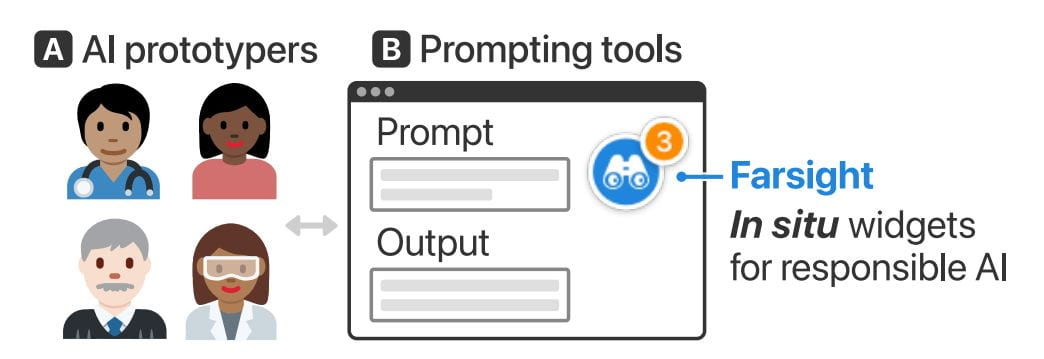

Farsight is a New Tool that Teaches Responsible AI Practices for Large Language Models

LLMs have empowered millions of people with diverse backgrounds, including writers, doctors, and educators, to build and prototype powerful AI apps through prompting. However, many of these AI prototypers don’t have training in computer science, let alone responsible AI practices.

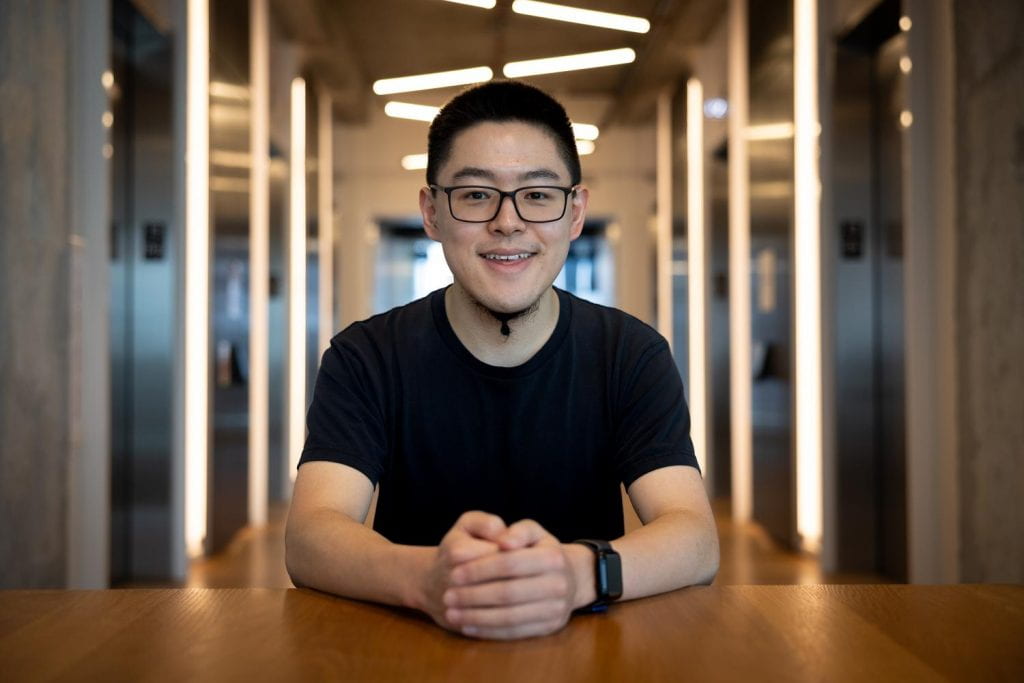

Jay Wang, PhD student in Machine Learning

By Bryant Wine

Thanks to a Georgia Tech researcher’s new tool, application developers can now see potential harmful attributes in their prototypes.

Farsight is a tool designed for developers who use large language models (LLMs) to create applications powered by artificial intelligence (AI). Farsight alerts prototypers when they write LLM prompts that could be harmful and misused.

Downstream users can expect to benefit from better quality and safer products made with Farsight’s assistance. The tool’s lasting impact, though, is that it fosters responsible AI awareness by coaching developers on the proper use of LLMs.

Machine Learning Ph.D. candidate Zijie (Jay) Wang is Farsight’s lead architect. He will present the paper at the upcoming Conference on Human Factors in Computing Systems (CHI 2024). Farsight ranked in the top 5% of papers accepted to CHI 2024, earning it an honorable mention for the conference’s best paper award.


“LLMs have empowered millions of people with diverse backgrounds, including writers, doctors, and educators, to build and prototype powerful AI apps through prompting. However, many of these AI prototypers don’t have training in computer science, let alone responsible AI practices,” said Wang.

“With a growing number of AI incidents related to LLMs, it is critical to make developers aware of the potential harms associated with their AI applications.”

Wang cited a case in which two lawyers used ChatGPT to write a legal brief. A U.S. judge sanctioned the lawyers because their submitted brief contained six fictitious case citations that the LLM fabricated.

With Farsight, the group aims to improve developers’ awareness of responsible AI use. It achieves this by highlighting potential use cases, affected stakeholders, and possible harm associated with an application in the early prototyping stage.

A user study involving 42 prototypers showed that developers could better identify potential harms associated with their prompts after using Farsight. The users also found the tool more helpful and usable than existing resources.

Feedback from the study showed Farsight encouraged developers to focus on end-users and think beyond immediate harmful outcomes.

“While resources, like workshops and online videos, exist to help AI prototypers, they are often seen as tedious, and most people lack the incentive and time to use them,” said Wang.

“Our approach was to consolidate and display responsible AI resources in the same space where AI prototypers write prompts. In addition, we leverage AI to highlight relevant real-life incidents and guide users to potential harms based on their prompts.”

Farsight employs an in-situ user interface to show developers the potential negative consequences of their applications during prototyping.

Alert symbols for “neutral,” “caution,” and “warning” notify users when prompts require more attention. When a user clicks the alert symbol, an awareness sidebar expands from one side of the screen.

The sidebar shows an incident panel with actual news headlines from incidents relevant to the harmful prompt. The sidebar also has a use-case panel that helps developers imagine how different groups of people can use their applications in varying contexts.

Another key feature is the harm envisioner. This functionality takes a user’s prompt as input and assists them in envisioning potential harmful outcomes. The prompt branches into an interactive node tree that lists use cases, stakeholders, and harms, like “societal harm,” “allocative harm,” “interpersonal harm,” and more.
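One way to picture the node tree is as a small recursive data structure. The sketch below is an assumption about how such a tree could be represented, not Farsight's actual code: the `Node` class, the `collect` helper, and the example prompt, use case, and stakeholders are hypothetical. Only the harm category names come from the article. In Farsight an LLM generates the branches; here they are written by hand.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One node in a hypothetical harm-envisioning tree."""
    kind: str   # "prompt", "use_case", "stakeholder", or "harm"
    label: str
    children: List["Node"] = field(default_factory=list)

    def add(self, kind: str, label: str) -> "Node":
        """Attach a child node and return it so calls can be chained."""
        child = Node(kind, label)
        self.children.append(child)
        return child


def collect(node: Node, kind: str) -> List[str]:
    """Depth-first list of labels for every node of the given kind."""
    found = [node.label] if node.kind == kind else []
    for child in node.children:
        found.extend(collect(child, kind))
    return found


# Hand-written example tree: prompt -> use case -> stakeholders -> harms.
root = Node("prompt", "Draft a legal brief from these notes")
use_case = root.add("use_case", "Preparing a court filing")
lawyers = use_case.add("stakeholder", "Lawyers")
lawyers.add("harm", "interpersonal harm")
clients = use_case.add("stakeholder", "Clients")
clients.add("harm", "allocative harm")
clients.add("harm", "societal harm")

harms = collect(root, "harm")
stakeholders = collect(root, "stakeholder")
```

Walking the tree with `collect(root, "harm")` gathers every harm reachable from the prompt, which mirrors how a user could expand the tree from a prompt down to specific harms.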

The novel design and insightful findings from the user study resulted in Farsight’s acceptance for presentation at CHI 2024.

CHI is considered the most prestigious conference for human-computer interaction and one of the top-ranked conferences in computer science.

CHI is affiliated with the Association for Computing Machinery. The conference takes place May 11-16 in Honolulu.

Wang worked on Farsight in summer 2023 while interning at Google’s People + AI Research group (PAIR).

Farsight’s co-authors from Google PAIR include Chinmay Kulkarni, Lauren Wilcox, Michael Terry, and Michael Madaio. The group’s ties to Georgia Tech extend beyond Wang.

Terry, the current co-leader of Google PAIR, earned his Ph.D. in human-computer interaction from Georgia Tech in 2005. Madaio graduated from Tech in 2015 with an M.S. in digital media. Wilcox was a full-time faculty member in the School of Interactive Computing from 2013 to 2021 and serves in an adjunct capacity today.

Though not an author, one of Wang’s influences is his advisor, Polo Chau. Chau is an associate professor in the School of Computational Science and Engineering. His group specializes in data science, human-centered AI, and visualization research for social good.

“I think what makes Farsight interesting is its unique in-workflow and human-AI collaborative approach,” said Wang.

“Furthermore, Farsight leverages LLMs to expand prototypers’ creativity and brainstorm a wide range of use cases, stakeholders, and potential harms.”

New Research Embodies Queer History Through Artifacts

A research team in the Ivan Allen College of Liberal Arts has expanded its Queer HCI scholarship, using queer theory to inform the design of wearable experiences that explore archives of gender and sexuality. The project, “Button Portraits,” invites individuals to listen to oral histories from prominent queer activists by pinning archival buttons to a wearable audio player, eliciting moving personal impressions.

The researchers observed 17 participants’ experiences with “Button Portraits,” and with semi-structured interviews, surfaced reflections on how the design evoked personal connections to history, queer self-identification, and relatability to archival materials.

*The original story reported on an earlier version of the research project.

“Button Portraits” is about using technology to create tangible connections to LGBTQ+ history that help people connect across generations and reflect on shared queer experiences.

Alexandra Teixeira Riggs, PhD student in Digital Media

Georgia Tech Partners with Children’s Hospital on New Heart Surgery Planning Tool

By Bryant Wine

Cardiologists and surgeons could soon have a new mobile augmented reality (AR) tool to improve collaboration in surgical planning.

ARCollab is an iOS AR application designed for doctors to interact with patient-specific 3D heart models in a shared environment. It is the first surgical planning tool that uses multi-user mobile AR on iOS.

The application’s collaborative feature overcomes limitations in traditional surgical modeling and planning methods. This offers patients better, personalized care from doctors who plan and collaborate with the tool.

Georgia Tech researchers partnered with Children’s Healthcare of Atlanta (CHOA) in ARCollab’s development. Pratham Mehta, a computer science major, led the group’s research.


“We have conducted two trips to CHOA for usability evaluations with cardiologists and surgeons. The overall feedback from ARCollab users has been positive,” Mehta said.

“They all enjoyed experimenting with it and collaborating with other users. They also felt like it had the potential to be useful in surgical planning.”

ARCollab’s collaborative environment is the tool’s most novel feature. It allows surgical teams to study and plan together in a virtual workspace, regardless of location.

ARCollab supports a toolbox of features for doctors to inspect and interact with their patients’ AR heart models. With a few finger gestures, users can scale and rotate, “slice” into the model, and modify a slicing plane to view omnidirectional cross-sections of the heart.
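The slicing idea can be illustrated with basic plane geometry. The sketch below is not ARCollab's implementation (the app is built for iOS, and its internals are not described here). It is a minimal Python illustration, with hypothetical function names, of how a slicing plane defined by a point and a normal splits a model's vertices. Changing the normal gives a cross-section in any direction.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def signed_offset(point: Vec3, plane_point: Vec3, plane_normal: Vec3) -> float:
    """Dot product of (point - plane_point) with the normal.

    Positive on the side the normal points to, negative on the other side.
    Equals the true signed distance only when the normal has unit length.
    """
    return sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal))


def slice_vertices(vertices: List[Vec3], plane_point: Vec3, plane_normal: Vec3) -> List[Vec3]:
    """Keep vertices on or behind the plane; those in front are cut away."""
    return [v for v in vertices if signed_offset(v, plane_point, plane_normal) <= 0.0]


# Three toy vertices stacked along the z-axis.
model = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 2.0)]

# A horizontal plane at z = 1 removes everything above it.
kept = slice_vertices(model, plane_point=(0.0, 0.0, 1.0), plane_normal=(0.0, 0.0, 1.0))

# Tilting the plane to face along x keeps all vertices, since none has x > 0.
kept_x = slice_vertices(model, plane_point=(0.0, 0.0, 0.0), plane_normal=(1.0, 0.0, 0.0))
```

A full renderer would also clip the triangles that straddle the plane. This sketch only classifies vertices, which is enough to show how moving or rotating the plane changes what remains visible.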

Developing ARCollab on iOS offers a twofold advantage. It streamlines deployment and accessibility by making the app available on the iOS App Store and Apple devices. Building ARCollab on Apple’s peer-to-peer network framework ensures the functionality of the AR components. It also lessens the learning curve, especially for experienced AR users.

ARCollab overcomes the limitations of the traditional practice of planning surgery with physical heart models. Producing physical models is time-consuming, resource-intensive, and irreversible compared to digital models. It is also difficult for surgical teams to plan together since they are limited to studying a single physical model.

Digital and AR modeling is growing as an alternative to physical models. CardiacAR is one such tool the group has already created.

However, digital platforms lack multi-user features essential for surgical teams to collaborate during planning. ARCollab’s multi-user workspace advances the technology’s potential as a mass replacement for physical modeling.

“Over the past year and a half, we have been working on incorporating collaboration into our prior work with CardiacAR,” Mehta said.

“This involved completely changing the codebase, rebuilding the entire app and its features from the ground up in a newer AR framework that was better suited for collaboration and future development.”

Its interactive and visualization features, along with its novelty and innovation, led the Conference on Human Factors in Computing Systems (CHI 2024) to accept ARCollab for presentation. The conference occurs May 11-16 in Honolulu.

CHI is considered the most prestigious conference for human-computer interaction and one of the top-ranked conferences in computer science.

M.S. student Harsha Karanth and alumnus Alex Yang (CS 2022, M.S. CS 2023) co-authored the paper with Mehta. They study under Polo Chau, an associate professor in the School of Computational Science and Engineering.

The Georgia Tech group partnered with Timothy Slesnick and Fawwaz Shaw from CHOA on ARCollab’s development.

“Working with the doctors and having them test out versions of our application and give us feedback has been the most important part of the collaboration with CHOA,” Mehta said.

“These medical professionals are experts in their field. We want to make sure to have features that they want and need, and that would make their job easier.”

This surgical planning technology—developed for iOS and powered by augmented reality—is a game changer. It offers innovative ways for surgeons and cardiologists to collaborate and plan complex surgeries.

Duen Horng “Polo” Chau, Assoc. Professor in Computational Science and Engineering

LIVE UPDATES

See you in Yokohama!

Development: College of Computing, Institute for Robotics and Intelligent Machines (IRIM)

Project Lead/Data Graphics: Joshua Preston

Data Management: Christa Ernst, Joni Isbell

Live Event Updates: Christa Ernst