Georgia Tech @ HRI 2023

Human-robot interaction (HRI) is here. No longer confined to manufacturing floors or science fiction, robots are entering society in various ways. Powering this robot revolution is artificial intelligence, which gives researchers and technologists new opportunities to innovate.

Georgia Tech researchers will present their latest advancements in HRI March 13-16 in Stockholm, where leading roboticists will meet at the 18th Annual ACM/IEEE International Conference on Human-Robot Interaction (HRI).

Explore Georgia Tech’s people and progress in AI for robotics, and see where the future might lead. 🧑🤖

Collaborative Bots

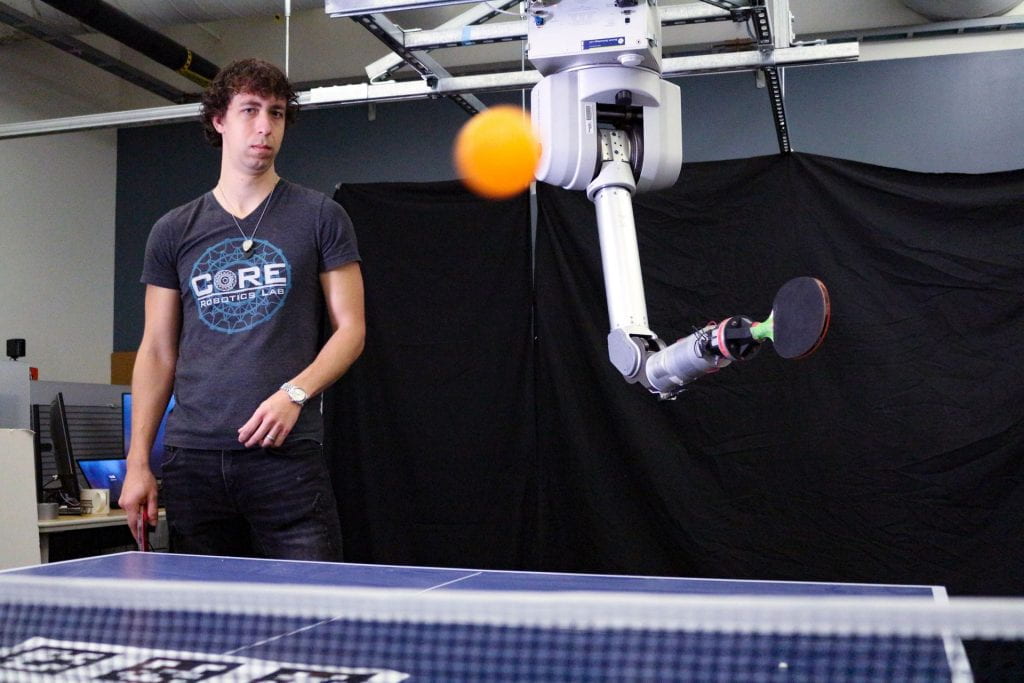

As high-speed, agile robots become more commonplace, they will have the potential to better aid and collaborate with humans. In research accepted at HRI, Matthew Gombolay, director of the Core Robotics Lab at Georgia Tech, aims to enable the deployment of safe and trustworthy agile robots that operate in proximity to humans.

Photo by Christa Ernst

RESEARCHERS

Ph.D. Student

Assoc. Professor, Interactive Computing

Asst. Professor, Interactive Computing

Asst. Professor, Interactive Computing

Robotics Ph.D. Student

CS Ph.D. Student

Graduate Student

MS Robotics Student

Professor, Business

CS Ph.D. Student

MSCS ’22

Robotics Ph.D. Student

Robotics Ph.D. Candidate

Robotics Ph.D. Student

CS Ph.D. Student

Robotics Ph.D. Student

Professor, Interactive Computing

BS CS Student

Robotics Ph.D. Student

Main Program

Impacts of Robot Learning on User Attitude and Behavior

Nina Moorman (Georgia Institute of Technology), Erin Hedlund-Botti (Georgia Institute of Technology), Mariah Schrum (Georgia Institute of Technology), Manisha Natarajan (Georgia Institute of Technology), Matthew Gombolay (Georgia Institute of Technology)

The Effect of Robot Skill Level and Communication in Rapid, Proximate Human-Robot Collaboration

Kin Man Lee (Georgia Institute of Technology), Arjun Krishna (Georgia Institute of Technology), Zulfiqar Zaidi (Georgia Institute of Technology), Rohan Paleja (Georgia Institute of Technology), Letian Chen (Georgia Institute of Technology), Erin Hedlund-Botti (Georgia Institute of Technology), Mariah Schrum (Georgia Institute of Technology), Matthew Gombolay (Georgia Institute of Technology)

Pioneers

Longitudinal Proactive Robot Assistance

Maithili Patel (Georgia Institute of Technology)

Investigating Learning from Demonstration in Imperfect and Real World Scenarios

Erin Hedlund (Georgia Institute of Technology), Matthew Gombolay (Georgia Institute of Technology)

Workshops

Semantic Scene Understanding for Human-Robot Interaction

Maithili Patel (Georgia Institute of Technology), Fethiye Irmak Dogan (KTH Royal Institute of Technology), Zhen Zeng (J.P. Morgan AI Research), Kim Baraka (Vrije Universiteit (VU) Amsterdam), Sonia Chernova (Georgia Institute of Technology)

Late-Breaking Work

TEAM 3 Challenge: Tasks for Multi-Human and Multi-Robot Collaboration with Voice and Gestures

Michael Munje (Georgia Institute of Technology), Lylybell Teran (Columbia University), Bradon Thymes (Cornell University), Joseph Salisbury (Riverside Research)

How to Train Your Guide Dog: Wayfinding and Safe Navigation with Human-Robot Modeling

Joanne Kim (Georgia Institute of Technology), Wenhao Yu (Google LLC), Jie Tan (Google), Greg Turk (Georgia Institute of Technology), Sehoon Ha (Georgia Institute of Technology)

Robotic Interventions for Learning (ROB-I-LEARN): Examining Social Robotics for Learning Disabilities through Business Model Canvas

Anshu Arora (University of the District of Columbia), Amit Arora (University of the District of Columbia), K. Sivakumar (Lehigh University), John R. McIntyre (Georgia Institute of Technology)

Lying About Lying: Examining Trust Repair Strategies After Robot Deception in a High-Stakes HRI Scenario

Kantwon Rogers (Georgia Institute of Technology), Reiden Webber (Georgia Institute of Technology), Ayanna Howard (The Ohio State University)

Examining Trust Repair Strategies After Robot Deception in a High-Stakes Scenario

Robots can lie. But building back trust with people is also possible.

When the stakes are high, as when a self-driving car gives its occupants cause for concern, or in a future where a spaceship’s automated navigator fibs about details en route to Mars, how do people respond? Researchers examine which apologies from the robot might work.

From the minds of Kantwon Rogers (pictured right), Reiden Webber (pictured left), and Ayanna Howard.

Photo by Terence Rushin

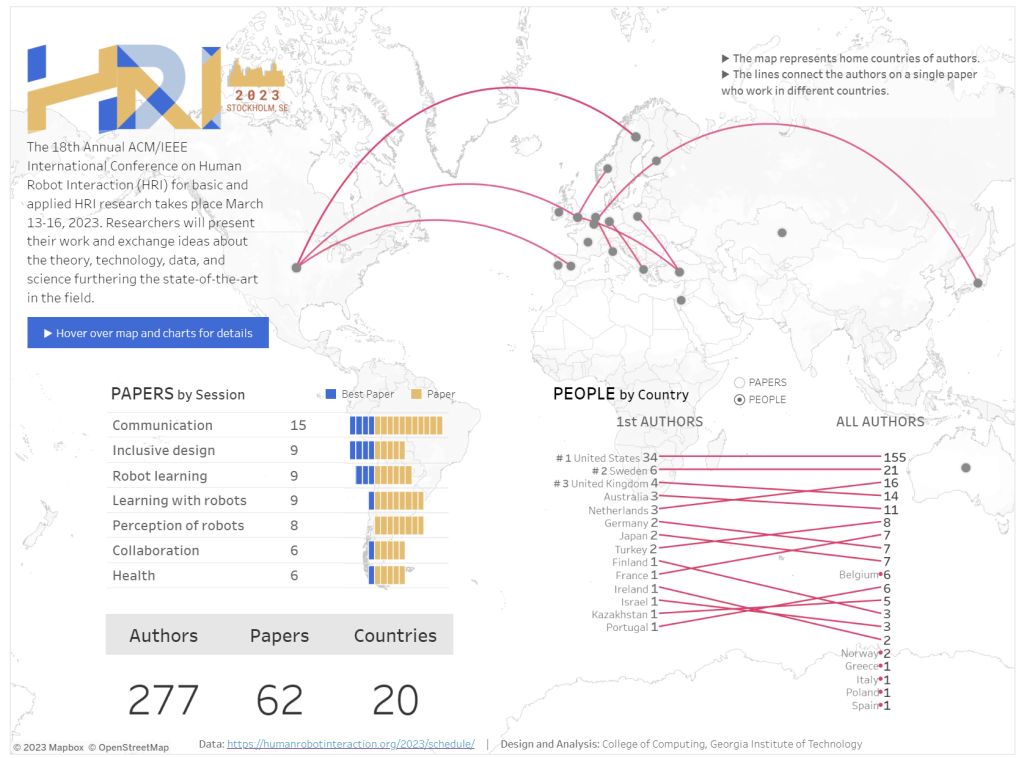

GLOBAL VIEW

This map represents HRI’s main papers program. Georgia Tech has work in the Robot Learning and Collaboration sessions. Click on the map to go to the interactive version.

Researchers Use Table Tennis to Understand Human-Robot Dynamics in Agile Environments

By Breon Martin

A team of researchers is using the sport of table tennis to demonstrate areas where a robot can work closely with human partners to complete tasks. The results also show that humans may not always trust a robot’s explanation of its intended action.

The robot—a Barrett WAM arm equipped with a camera and paddle—was trained through a machine learning process called imitation learning. The researchers developed a system to give the robot positive reinforcement for successful volleys, and negative reinforcement for unsuccessful ones.

Machine Learning Center at Georgia Tech

The Machine Learning Center aims to research and develop innovative and sustainable technologies using machine learning and artificial intelligence (AI) that serve in socially and ethically responsible ways.

The center’s mission is to establish a research community that leverages the Georgia Tech interdisciplinary context, trains the next generation of machine learning and AI pioneers, and is home to current leaders in machine learning and AI.

Institute for Robotics and Intelligent Machines

The Institute for Robotics and Intelligent Machines (IRIM) at Georgia Tech supports and facilitates the operation of several core research facilities on campus, allowing faculty, students, and collaborators to advance the boundaries of robotics research.

IRIM breaks through disciplinary boundaries and allows for transformative research that transitions from theory to robustly deployed systems featuring next-generation robots. Fundamental research includes expertise in mechanics, control, perception, artificial intelligence and cognition, interaction, and systems.

Web Development: Joshua Preston

Writers: Catherine Barzler, Breon Martin

Photography: Christa Ernst, Terence Rushin

Interactive Graphic: Joshua Preston

Video Clips: Core Robotics Lab