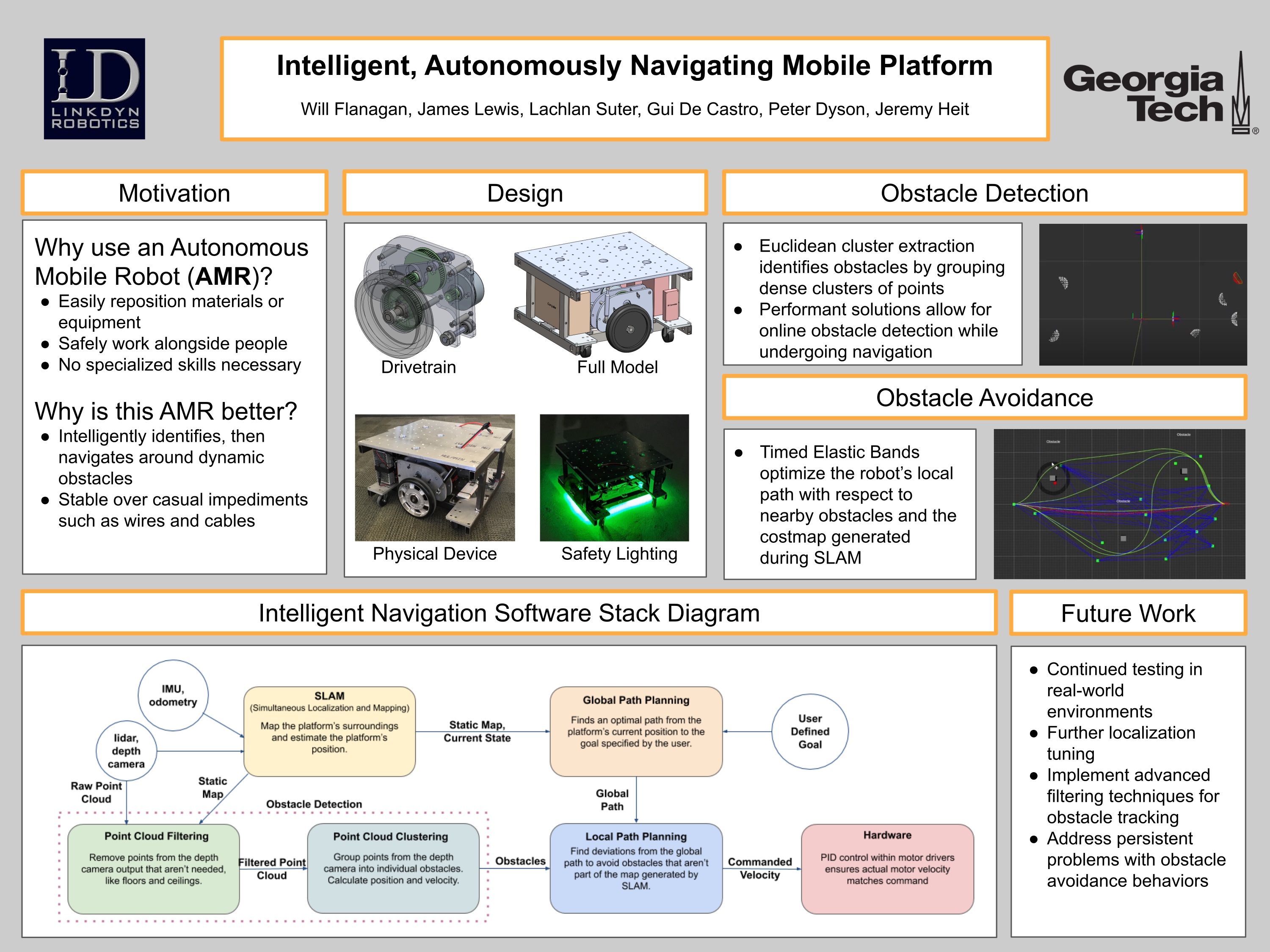

For this Throwback Thursday, we interviewed James Lewis, a member of the Helping Hand Team, who supported the creation of the project “Intelligent Autonomously Navigating Mobile Platform.” With the team’s sponsor, LinkDyn Robotics, they were able to redesign and improve on an older prototype from the company. James presented with his teammates, Will Flanagan, Peter Dyson, Lachlan Suter, Jeremy Heit, and Guilherme De Castro, during the Fall 2020 Virtual Expo.

Platform with drivetrains and sensors removed.

Platform with all sensors and drivetrains attached.

Q: Could you give us a brief description of your project for those who may not know about it?

A: Our project was to redesign and upgrade a prototype mobile platform from our sponsor, LinkDyn Robotics, to improve the device’s manufacturability and obstacle avoidance. These types of robots are often used in warehouse automation for material handling but may also become common within the hospitality industry. Our final product was a 2-foot by 2-foot by 1-foot tall wheeled robot built around a welded steel frame with modular drivetrains. A depth camera and LIDAR sensor were integrated into the design to map the environment and navigate through it. As part of our deliverables, we manufactured our design and wrote the software to demonstrate autonomous navigation in real-world testing.

Q: Where did you first draw inspiration for the idea of the “Intelligent Autonomously Navigating Mobile Platform” for your Capstone Design Project?

A: LinkDyn Robotics is the maker of a very advanced force-control robotic arm. However, to better serve their customers they needed a way to move their arms around a workspace for tasks like picking and placing inventory on shelves. One of our team members built the first prototype for LinkDyn over the previous summer as an intern, and from this very unpolished design we identified a variety of potential improvements. A lot of inspiration for the design and sensor choice came from looking at existing mobile robots on the market, while the software design was inspired by open-source projects integrated into ROS (Robot Operating System) and research into low-speed autonomous vehicles.

Q: What was the creation process like and how did you and your teammates come together to finish your project?

A: Both the physical and software components of this project were quite demanding, so we split into two subteams to tackle each aspect in parallel. While half the team was working on the mechanical and electrical design, the other half used a simulation environment consisting of ROS and Gazebo to develop and test the autonomous navigation stack. Each member was typically assigned individual tasks and larger group sessions were used whenever an issue posed a persistent problem. In the final weeks before the expo, we met in person to assemble the mobile platform and test.

Q: What were some of the problems that you faced along the way and how did you overcome those obstacles?

A: Since we were working almost entirely remotely, it was difficult to make sure that everybody had access to the same tools and was able to contribute. The mechanical designs were created using SolidWorks and synced between members using a shared Google Drive folder. For the software subteam, each member needed access to the ROS+Gazebo simulation environment, but severe compatibility issues arose. To address this issue, we created a Docker image with the full set of simulation and development tools we were using.

Q: How did it feel to present your project virtually during this pandemic?

A: Presenting remotely definitely made it harder to connect with the audience and judges. The expo felt less personal and it was much more difficult to fully convey what our project involved. While we put the mobile platform on camera during the presentation to show that we actually manufactured our device, it would’ve been much easier to demonstrate our device in person.

Q: What do you think made your team successful in creating an autonomous mobile platform?

A: Our team spent a lot of time making sure that the transition from simulation to hardware would be smooth. We only had a week or two to test on actual hardware, so any trouble transitioning could have prevented us from successfully demonstrating our work. The Docker environment and Gazebo were crucial for this since they allowed us to run our full stack in simulation in an almost identical manner to the way we ran it on the physical system.

Q: Do you have any advice for future teams?

A: Issue tracking is a great way to formally assign team members tasks, even if those tasks aren’t software-related. It also makes it easier for team members to be aware of what others are working on, leading to a more cohesive effort. The importance of communication can also never be overstated.