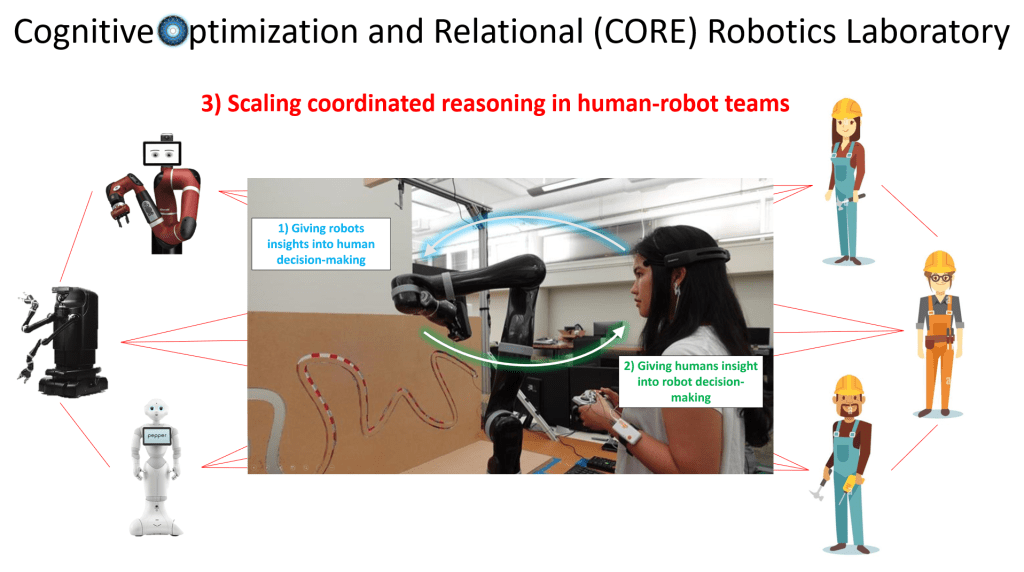

I work to democratize robotic technology – placing the power of robots in the hands of everyone by developing new computational methods and human factors insights that enable robots to 1) learn novel skills via human demonstration from diverse end-users, 2) understand and support the human in human-robot interaction (HRI), and 3) coordinate human-robot teams. I employ human-subjects research methods to inform the formulation of our systems, validate that our methods improve HRI across both subjective and objective metrics, and develop design guidelines for researchers and practitioners alike.

My research is inspired by the potential of robots to become an invaluable part of our everyday lives, from autonomous vehicles (AVs) to healthcare, manufacturing, and defense. To reach this potential, however, we must move away from army-of-consultants approaches that yield expensive, ad hoc solutions and move towards interactive learning techniques, e.g., Learning from Demonstration (LfD), in which robots learn skills (e.g., an AV navigating an obstacle course with user-specific objectives) via human feedback (e.g., robots observing human skill performance). Likewise, robots must support humans through human-centered design techniques, explainable Artificial Intelligence (XAI), and team coordination algorithms.

Research Highlights

Thrust 1) Learning from Diverse, Imperfect Teachers – I specialize in developing new computational methods that address suboptimality and heterogeneity in LfD. Suboptimality is a natural consequence of humans who operate under bounded rationality and do not understand robot learning methods. Unfortunately, learning from suboptimal humans only reinforces that suboptimality in robot form. Heterogeneity arises from contextual and dispositional factors humans bring to the interaction, resulting in robots struggling to reconcile seemingly contradictory behaviors. Heterogeneous LfD is such a challenge that prior work has either opted to learn from a single human or focused on curating a set of homogeneous demonstrators; neither of these approaches scales.

Some of our recent works highlighting this research include:

Mariah Schrum*, Erin Hedlund-Botti*, Nina Moorman, and Matthew Gombolay (2022) | MIND MELD: Personalized Meta-Learning for Robot-Centric Imitation Learning | In Proc. International Conference on Human-Robot Interaction (HRI). [25% Acceptance Rate] [Best Paper Award]

Letian Chen*, Sravan Jayanthi*, Rohan Paleja, Daniel Martin, Nakul Gopalan, Viacheslav Zakharov, and Matthew Gombolay (2022) | Fast Lifelong Adaptive Inverse Reinforcement Learning from Demonstrations | In Proc. Conference on Robot Learning (CoRL). [39% Acceptance Rate]

Letian Chen, Rohan Paleja, and Matthew Gombolay (2022) | Learning from Suboptimal Demonstration via Self-Supervised Reward Regression | In Proc. Conference on Robot Learning (CoRL). [34% Acceptance Rate] [Plenary Talk] [Best Paper Finalist]

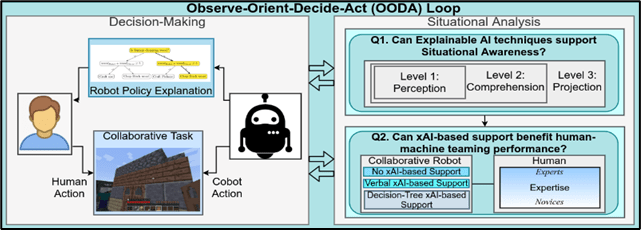

Thrust 2) Supporting the Human in HRI – Robotic systems are often rejected by end-users due to a lack of user trust and system usability. As such, we take a human-centered approach in designing our robots. Across domains, I have demonstrated that we can design robots with human-interpretable decision-making algorithms to regulate trust and improve human-robot team performance. A key focus of my work is on developing and quantifying the benefit of XAI techniques in HRI. We have developed novel XAI methods for both reinforcement learning (RL) and LfD. Uniquely, we have published key studies quantifying the objective and subjective benefits (or detriments) of various XAI techniques in instantaneous and sequential decision-making tasks (e.g., for human-robot teaming). Some of our recent works highlighting this research include:

Andrew Silva, Mariah Schrum, Erin Hedlund-Botti, Nakul Gopalan, and Matthew C. Gombolay (2023) | Explainable Artificial Intelligence: Evaluating the Objective and Subjective Impacts of xAI on Human-Agent Interaction | Published Version | International Journal of Human–Computer Interaction.

Rohan Paleja*, Yaru Niu*, Andrew Silva, Chace Ritchie, Sugju Choi, and Matthew Gombolay (2022) | Learning Interpretable, High-Performing Policies for Autonomous Driving | In Proc. Robotics: Science and Systems (RSS). [32% Acceptance Rate]

Rohan Paleja, Andrew Silva, Letian Chen, and Matthew Gombolay (2020) | Interpretable and Personalized Apprenticeship Scheduling: Learning Interpretable Scheduling Policies from Heterogeneous User Demonstrations | In Proc. Conference on Neural Information Processing Systems (NeurIPS). [20% Acceptance Rate]

Thrust 3) Coordinating Human-Robot Teams – Once robots can learn novel skills from non-roboticist end-users and support appropriate trust through design- (e.g., anthropomorphism) and algorithm-based (e.g., XAI) techniques, robots must now coordinate their activities at scale in human-robot teams. This coordination requires innovations in optimization and human modeling. My lab has pioneered novel learning representations and optimization techniques with graph neural networks (GNNs) for human-robot team coordination. We have also augmented these coordination techniques to account for the human in human-robot teaming (e.g., stochastic and time-varying performance). Finally, we have open-sourced our techniques for decentralized coordination of teams in partially observable environments through our GitHub repositories (e.g., MAGIC) and through an AAMAS’22 tutorial!

Video depicting our work developing graph neural network architectures and optimization techniques for coordinating robot teams for assembly manufacturing (Wang and Gombolay, RA-L’20).

Some of our recent works highlighting this research include:

Yaru Niu*, Rohan Paleja*, and Matthew Gombolay (2021) | Multi-Agent Graph-Attention Communication and Teaming | In Proc. Autonomous Agents and Multiagent Systems (AAMAS). [25% Acceptance Rate]

Zheyuan Wang, Chen Liu, and Matthew Gombolay (2021) | Heterogeneous Graph Attention Networks for Scalable Multi-Robot Scheduling with Temporospatial Constraints | Autonomous Robots.

Ruisen Liu, Manisha Natarajan, and Matthew Gombolay (2020) | Coordinating Human-Robot Teams with Dynamic and Stochastic Task Proficiencies | ACM Transactions on Human-Robot Interaction (THRI), Volume 11, Issue 1, pages 1-42.

Thrust++) Agile Robotics – We have a growing area of research in our lab on developing agile, safe, collaborative, and competitive robots for tennis! Check out a great video and the accompanying paper below. Our project website can be found here.

Zulfiqar Zaidi*, Daniel Martin*, Nathaniel Belles, Viacheslav Zakharov, Arjun Krishna, Kin Man Lee, Peter Wagstaff, Sumedh Naik, Matthew Sklar, Sugju Choi, Yoshiki Kakehi, Ruturaj Patil, Divya Mallemadugula, Florian Pesce, Peter Wilson, Wendell Hom, Matan Diamond, Bryan Zhao, Nina Moorman, Rohan Paleja, Letian Chen, Esmaeil Seraj, and Matthew Gombolay (2022) | Athletic Mobile Manipulator System for Robotic Wheelchair Tennis | IEEE Robotics and Automation Letters, Volume 8, Issue 4, pages 2245-2252.